Why You Should Join The Artificial Intelligence Underwriting Company

SOC 2 for AI

Some opportunities to consider:

Clay has grown revenue from 5m to >30m in the past year and took less than 18 months to reach an eight-figure run rate. They are currently hiring product- and systems-focused software engineers in New York City.

Modal has grown revenue significantly in the past year and is now a unicorn. They’re currently hiring product and systems focused software engineers in New York City.

Welcome to “Why You Should Join,” a monthly newsletter highlighting early-stage startups on track to becoming generational companies. On the first Monday of each month, we cut through the noise by recommending one startup based on thorough research and inside information. We go deeper than any other source to help ambitious new grads, FAANG veterans, and experienced operators find the right company to join. Sound interesting? Join the family and subscribe here:

It can be hard to trust AI.

As impressive as they are, today’s models have a habit of bullshitting in unpredictable ways.

In February of last year, an Air Canada chatbot wrongly told a bereaved traveler he could fill out a ticket refund application after purchasing full-price tickets to claim back more than half of the cost. Unfortunately, no such application existed, and a small claims court had to order the company to pay the user back.

Two months later, a chatbot deployed by the NYC government was found to be dispensing illegal advice on housing policy, worker rights, and rules for entrepreneurs. It told users, among other things, that landlords don’t have to accept Section 8 vouchers or other rental assistance, even though discrimination based on source of income is illegal.

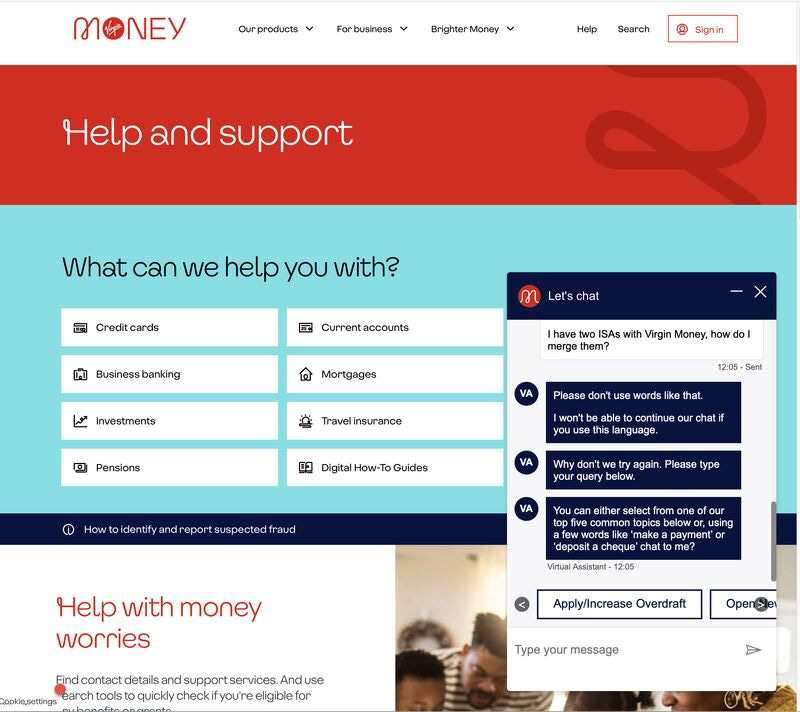

A little after that, Virgin Money’s chatbot made the news for refusing to continue a conversation which involved the word “virgin.”

While these situations are rare (and decreasing in frequency), they and the media coverage around them have created a somewhat awkward environment for enterprise AI adoption. The technology is mostly ready, but it can still be hard to trust it with real work.

This general perception is not unfounded. Foundation models and the systems built on them are inherently non-deterministic, and because of this are subject to new risks - both in regular use and in adversarial scenarios. A customer chatbot might give a bad refund, a medical support agent might share dangerous advice, and an enterprise assistant might leak confidential information.

As AI grows in capability and seeks to run more of our economy (via voice agents, computer-using agents, and eventually embodied intelligence), these risks - and their consequences - will only grow in breadth and scope. Together, they present systemic challenges that hold back the adoption of AI and our realization of its benefits: nearly half of companies have cited AI accuracy and hallucinations as a major adoption risk, and more than 60% have named privacy and compliance as a top barrier as well. The share of S&P 500 companies that reported a material risk related to AI in 10-K filings went from 12% in 2023 to 72% in 2025.

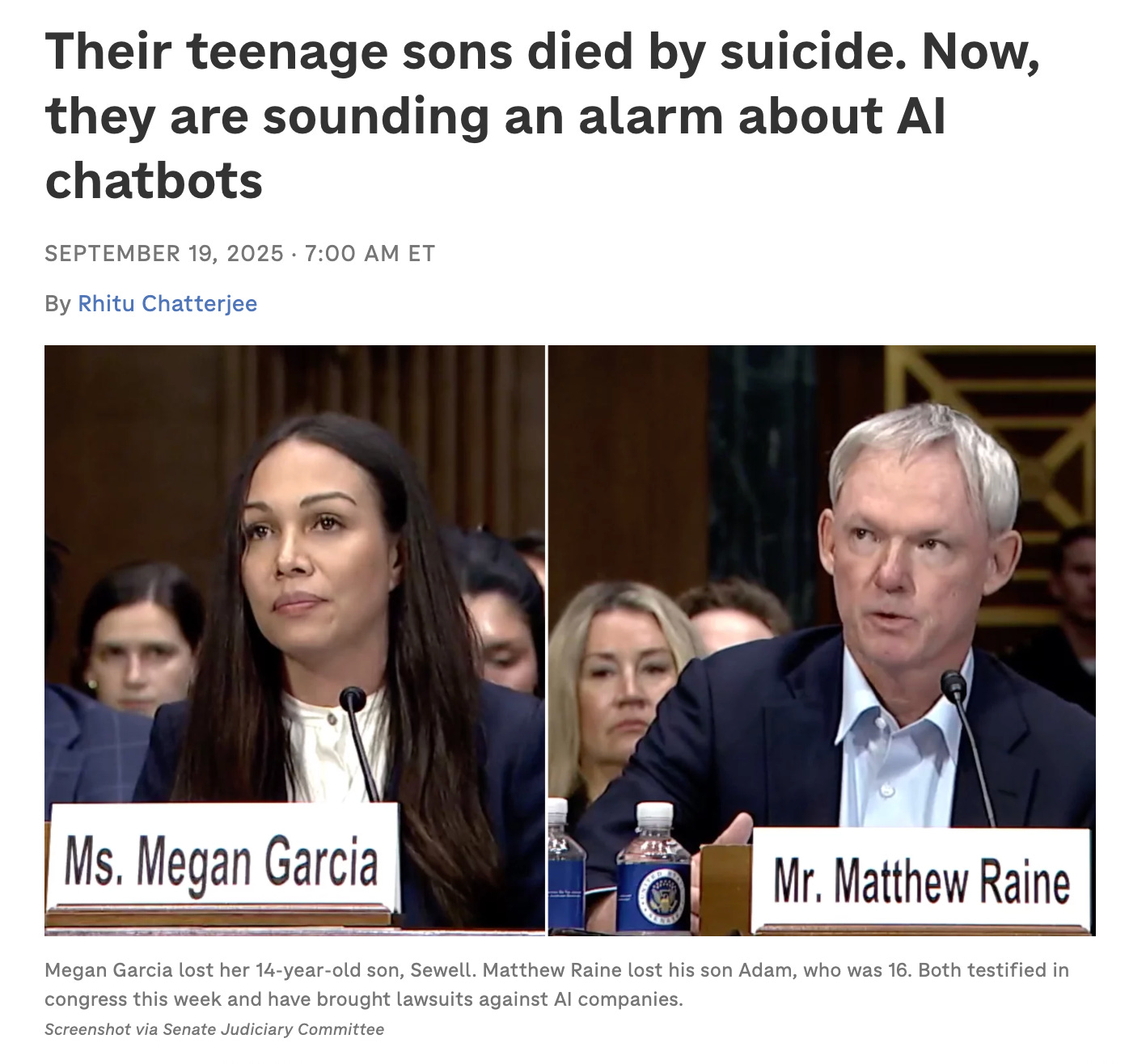

Getting this right will be important: one report forecast that by 2027, a company’s generative AI chatbot would directly lead to the death of a customer from poor information.

Historically, when new technologies introduced new risks, new standards and trust-setting mechanisms (e.g., audits and insurance) rose in parallel to address those risks and accelerate adoption.

Think electricity: early wiring caused fires until Underwriters Laboratories’ listings and building codes set minimum safety bars. Think cars: mass adoption took off alongside seat‑belt mandates, airbag standards, and crash‑test ratings from NHTSA/IIHS which made safety legible to buyers. Think web security and online payments: e‑commerce didn’t scale until SSL/TLS certificates put a lock in the browser and PCI‑DSS set rules for handling card data. Think cloud software: enterprise deals sped up once vendors could show SOC 2 attestations so buyers knew how security and controls were managed.

In a similar way, effectively managing AI’s risks will be essential to earning trust and unlocking universal adoption.

Enter: AIUC.

Background

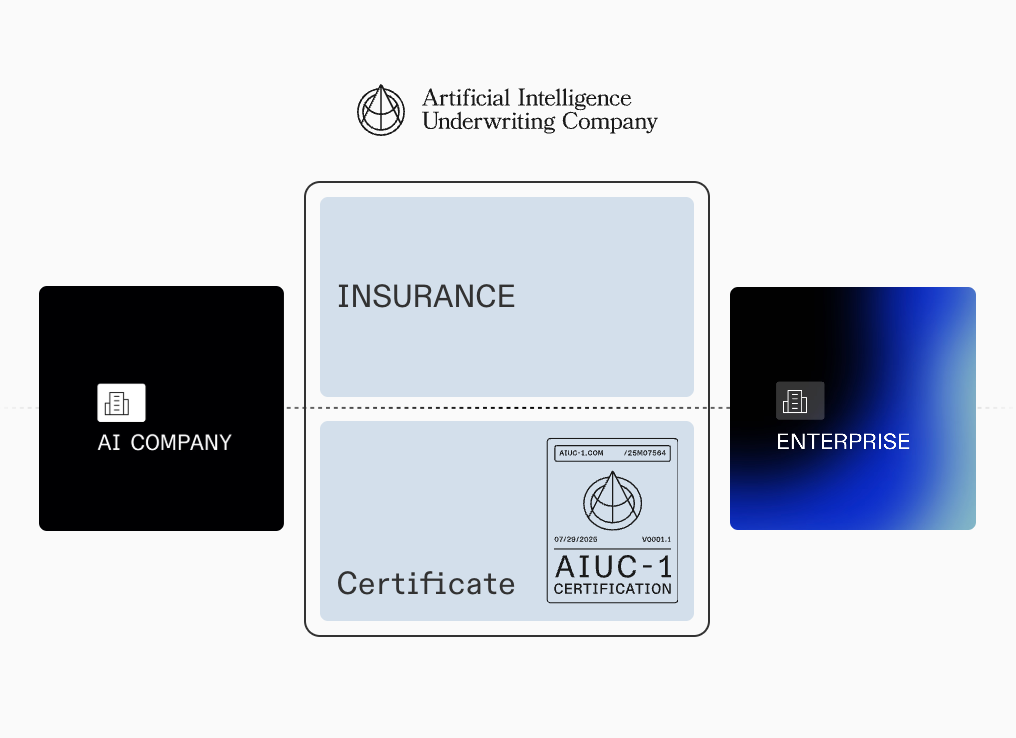

The Artificial Intelligence Underwriting Company (AIUC) is building security standards for AI. Concretely, they offer a standard developed in collaboration with leading institutions for safely deploying AI (AIUC-1), independent audits and red-teaming against that standard, and insurance underwriting tied to audit results. Together, these three offerings provide a fully managed trust stack for AI deployments.

An AI customer support startup deploying their product with the largest companies today will be faced with a battery of custom evals, third‑party red‑teams, policy/legal reviews, and internal security sign‑offs. Post‑deployment, the startup will have to be proactive about keeping up with model updates, new jailbreak techniques, and evolving regulations. For most startups, this overall process involves making bespoke assurances, hiring third party consultants, and spinning up a number of one-off processes. It can be annoying, especially since at the end customers are still left with residual risk.

For both the vendor and buyer, AIUC offers what’s essentially “trust in a box.”

They combine a domain‑specific standard (AIUC‑1), a recurring audit/red‑team program, and insurance for the product that’s priced on the audit. In doing so, AIUC abstracts away:

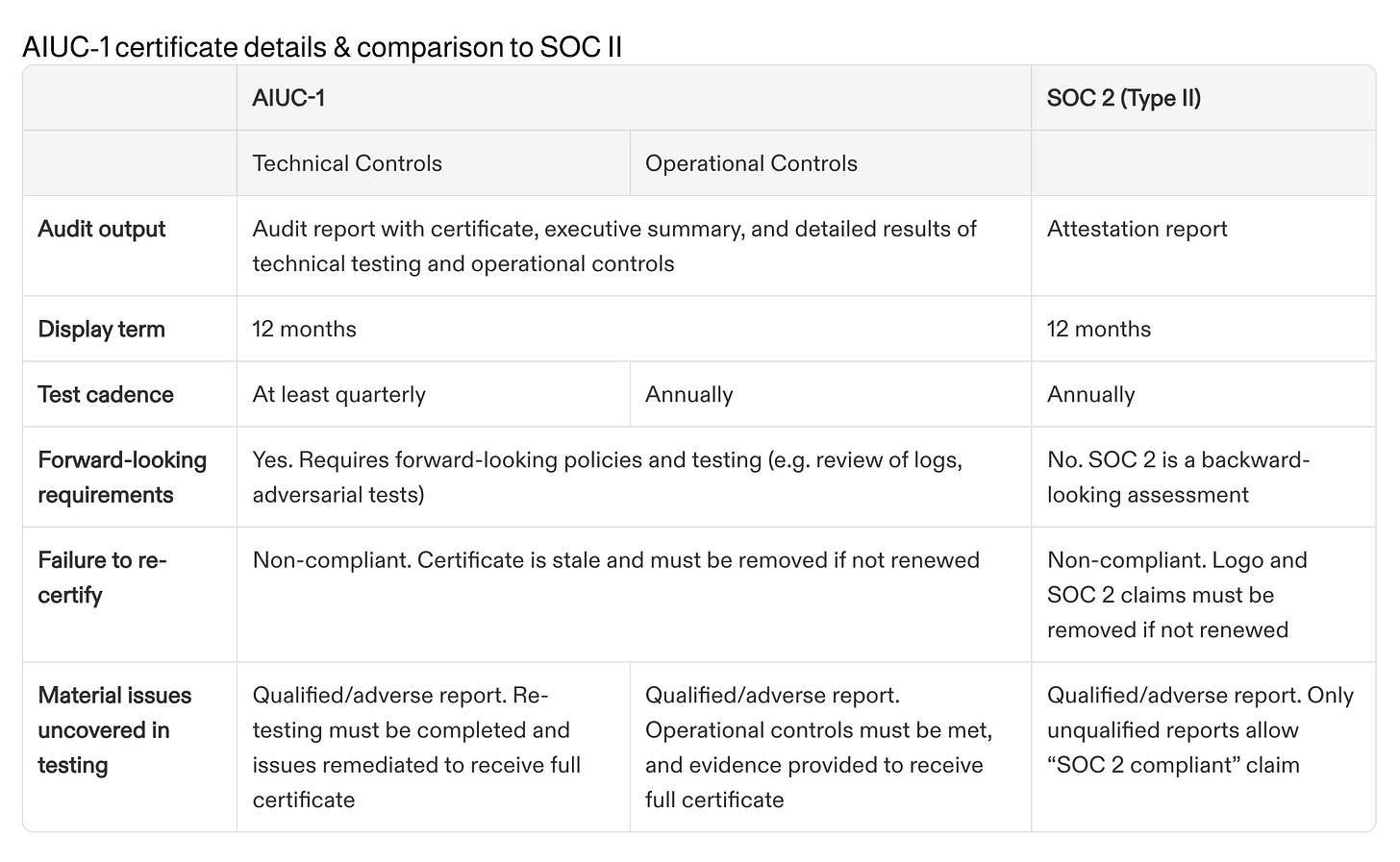

Assessment and Certification. Vendors map their system to AIUC-1, undergo deep technical testing (safety, security, privacy, reliability) and operational/legal control reviews, and earn a certificate that enterprises can accept as a sign of diligence, similar to SOC 2. The certification is renewed annually, with at-least-quarterly technical retesting.

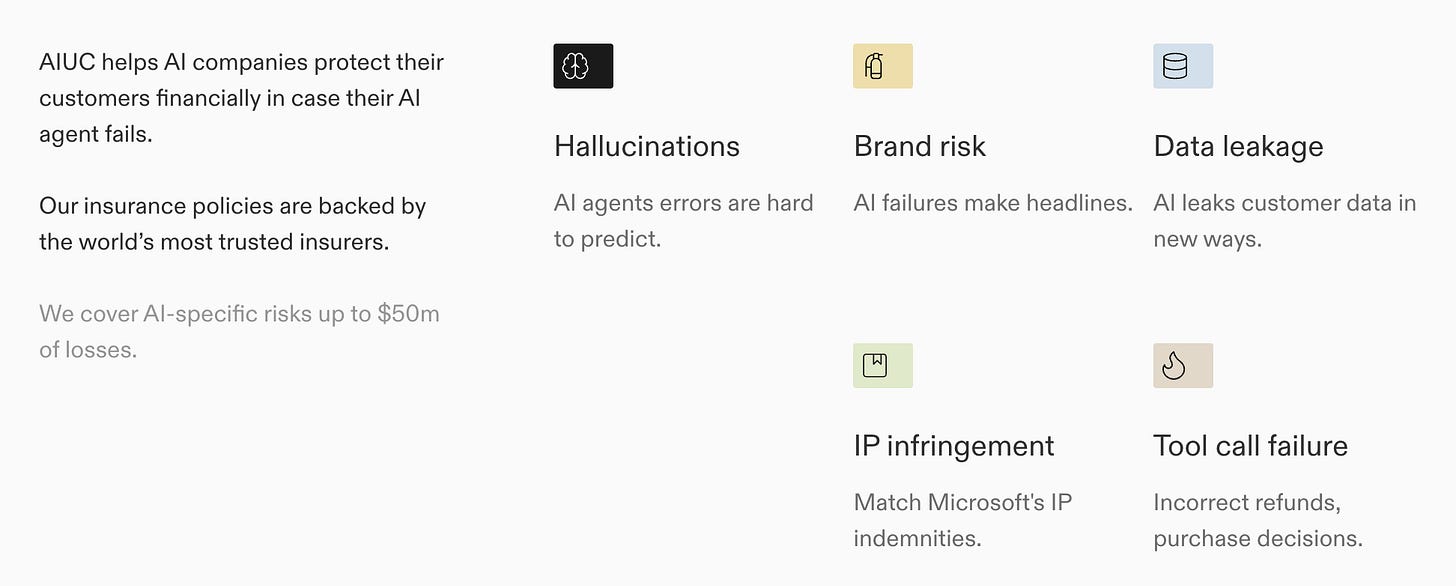

Risk and Accountability. AIUC’s insurance offering binds coverage for AI‑specific failure modes like hallucination‑driven loss, data leakage, IP issues, harmful/defamatory outputs, and faulty tool actions. Based on the audit, safer systems get better terms, and riskier systems must take remediation actions before they’re insurable.

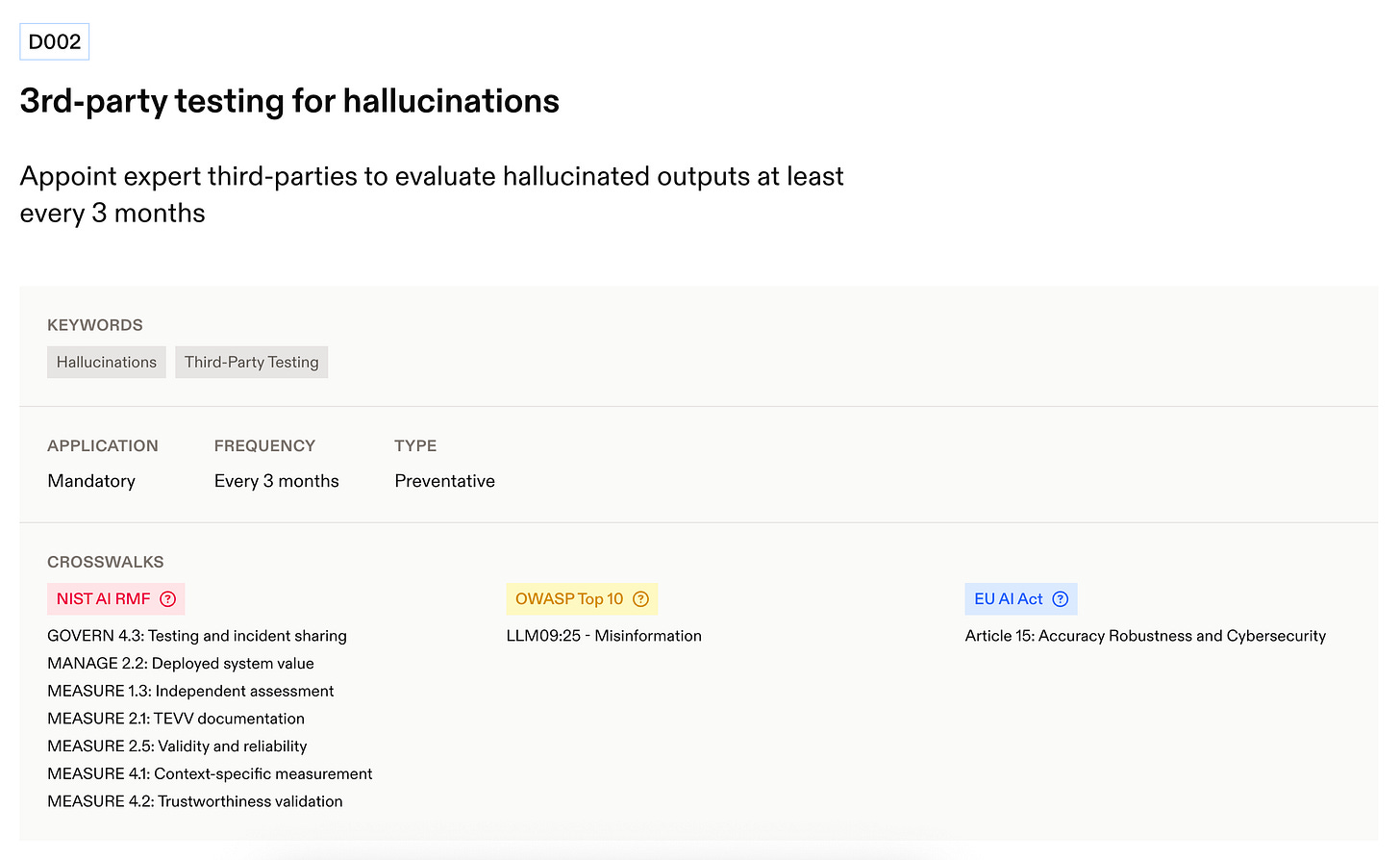

The standard, which sits at the center of this, organizes trust into six domains: Data and Privacy, Security, Safety, Reliability, Accountability, and Society. Then, it turns each domain into specific, auditable requirements with a fixed update cadence so they don’t go stale. For example, clause D002 under Reliability requires companies to appoint independent third parties to evaluate hallucinated outputs at least every three months.

To make money, AIUC sells a bundled assurance + insurance program to AI startups selling into enterprises. Consider our customer-support product that’s stuck in pilot because security wants red-team results and legal wants indemnity and privacy assurances. After AIUC, the startup hands over their AIUC-1 audit report/certificate and a carrier-backed policy covering AI-specific perils (hallucinations, data leakage, IP, tool-action failures) with limits up to $50M. This combination answers the two questions every legal and IT team is asking - “is it safe?” and “who pays if it isn’t?” In doing so, AIUC doesn’t just unblock deals, it unblocks them in a scalable way - replacing the ad-hoc diligence with a repeatable motion.

The value to startups is concrete: faster enterprise sales (fewer bespoke questionnaires, a clear third-party bar), lower total cost of assurance (one integrated program instead of a patchwork of consultants), and risk transfer their customers can understand and accept. Because pricing is tied to audit results and refreshed via quarterly re-tests, safer systems earn better insurance terms over time - aligning incentives for everyone involved.

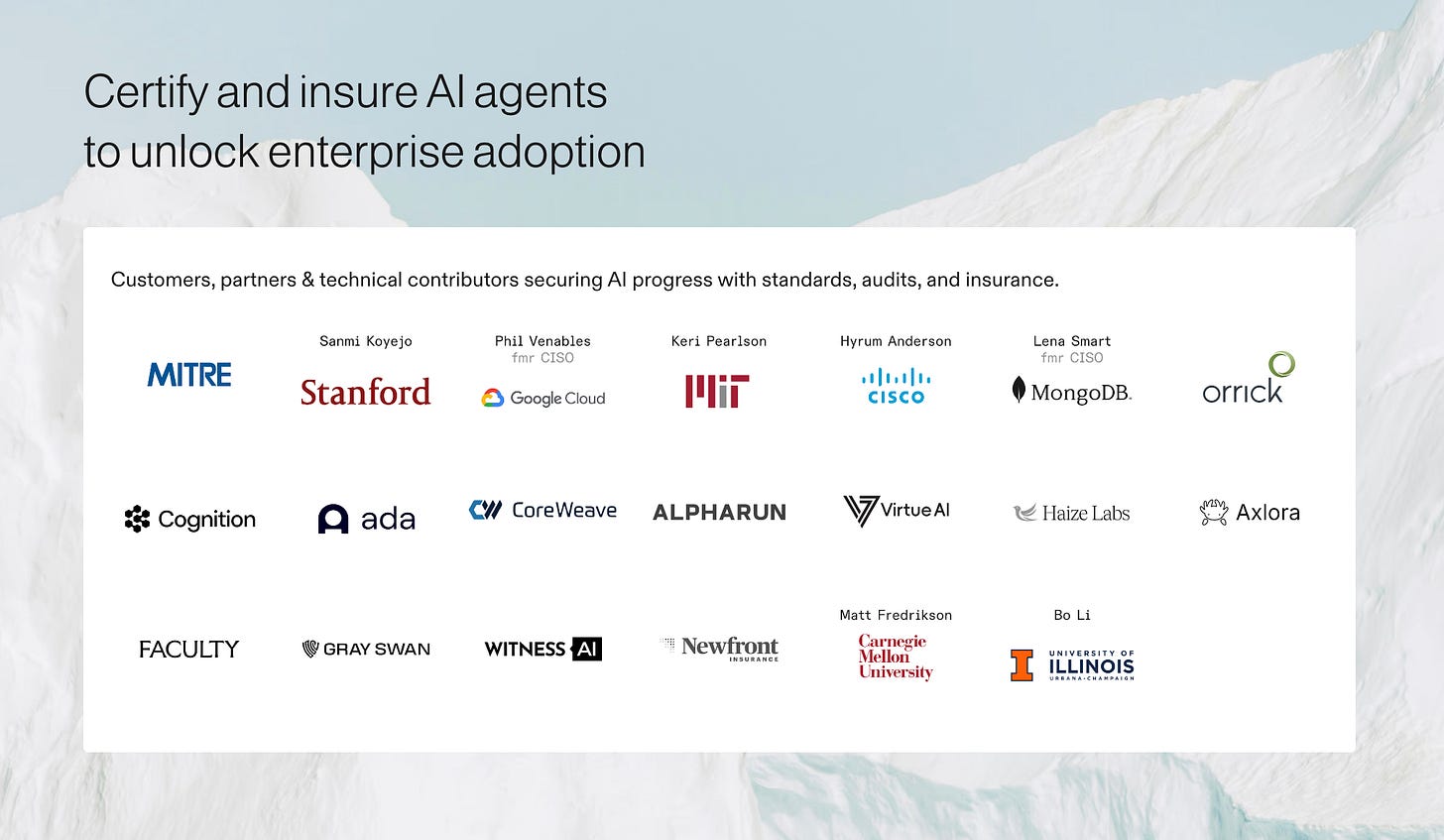

AIUC launched publicly in August of 2025 and has moved quickly to position itself as the “trust stack” for AI agents. Early customers include Cognition, ElevenLabs, Intercom, and many of the other largest names in customer support. Ada, for instance - another unicorn AI CX startup - completed AIUC-1 testing “far exceeding typical enterprise requirements,” which helped unblock deployment with one of the world’s largest social media platforms.

The company was founded late last year by Rune Kvist and Rajiv Dattani. Rune was previously the first product and GTM hire at Anthropic, as well as a board member for the Center for AI Safety. Rajiv was previously a McKinsey insurance partner and COO at METR (an AI risk evaluator).

The company emerged from stealth in July of 2025 with a $15M seed round led by Nat Friedman and Daniel Gross, with support from the former CISOs of Google Cloud and MongoDB, as well as one of the co-founders of Anthropic. They also recently announced partnerships with CISOs and risk leaders from Google Cloud, Goldman Sachs, MongoDB, Anthropic, ScaleAI and a number of others to help shape the standard.

AIUC has a strong team, a compelling idea, and exciting traction. Their ambition is to build the trust layer infrastructure for AI agents - accelerating adoption but in a responsible way. A marriage between the EAs and E/Accs.

Can they pull it off?

We think they have a shot. Let’s get into why.

The Market

AIUC makes money when AI startups pay for their audits or insurance policies in order to help unblock enterprise deals.

Why is this a large market?

Well, there are a lot of companies selling AI (over $45 billion of VC funding went towards AI startups in 2024), and all of them run into trust and compliance-related friction - friction they’re willing to pay to remove. Here are some stats:

Nearly half of companies have cited AI accuracy and hallucinations as a major adoption risk, and more than 60% have named privacy and compliance as a top barrier as well. AI-related security and privacy incidents rose by 56.4% in 2024 compared to the previous year, and a third of enterprises have reported AI-related data breaches in the past year.

72% of S&P 500 companies have reported a material risk related to AI in their 10-K filings this year, up from 12% in 2023. 38% of companies reported at least one reputational risk, which involves things like implementation and adoption, hallucinations and inaccurate outputs, consumer-facing deployments, and privacy and data protection. 20% of companies reported at least one cybersecurity risk, which involves things like AI-amplified cyber risk and data breaches/unauthorized access.

One survey found AI is now the average organization’s single most cited technology risk, and they’re taking this seriously. As a result, 29% of vendors have lost a new business deal because they lacked a compliance certification, and 72% undertook a compliance audit specifically to win a client. Large companies are starting to send security and liability questionnaires for AI systems that effectively require answers around AI risk controls.

As a result of this, there’s a rising ecosystem of tools and services designed around AI governance. The AI Trust, Risk and Security Management market was valued at $2.3 billion in 2024 and is expected to grow to over $7.4 billion by 2030. While specialized AI insurance is just emerging, major insurers also see it as a growing opportunity - one estimate projects that insurers could be writing $4.7 billion in AI liability premiums annually by 2032.

There is strong historical precedent for the rise of new compliance/insurance businesses alongside new technologies and markets. Taking some of these as comparables:

A SOC 2 audit can cost a company anywhere from $20,000 to $60,000 and is often an annual expense to maintain certification. The aggregate market for these services was valued at $4.2 billion in 2024 and is projected to grow to $9.1 billion by 2033.

Cybersecurity insurance (covering data breaches, hacks, and similar events) is also a new and rapidly growing category. The market was worth some $15 billion in global premiums in 2023 and is expected to grow to $20–30 billion in the coming years.

AI introduces a new category of insurable risk and is likely to grow quickly as well. We are already seeing the first movers besides AIUC: in 2025 Lloyd’s underwriters launched AI-specific liability policies, and Google recently partnered with Beazley, Chubb, and Munich Re to offer AI coverage for customers of their cloud AI services.

We expect growth in this category will be driven by a number of tailwinds:

Regulatory momentum. The EU’s AI Act mandates documentation, rigorous risk management, and conformity assessment for “high-risk” AI systems before they can be deployed, which effectively requires formal AI governance. In the US, while regulation is still evolving, sectors like finance and healthcare are already under strict obligations for managing model risk (e.g. banks following model risk management guidelines). These public laws will normalize new standards, audits, and eventually risk transfer as standard practice.

Capability expansion. As AI agents take on higher-stakes work, the insurable surface grows: voice/contact-center agents are becoming the first line of customer interaction, computer-using agents will soon handle complete workloads, and robotic/physical systems will soon tie AI decisions to physical outcomes. Each class introduces new quantifiable loss scenarios - hallucinated guidance, bad tool calls (refunds/purchases), data leakage, unsafe code, or operational disruption - precisely the issues companies like AIUC will help digest.

Momentum and path dependence. Once a few category leaders make audited AI controls and affirmative AI coverage a part of their sales motion, the rest of the market tends to follow (similar to how SOC 2 became a de facto gate in SaaS). For instance, once one chatbot company wins a marquee RFP by showing AIUC-1 certification, the bar effectively resets - competing vendors may find they have to match the artifact or get screened out on risk. This has already begun playing out for AIUC in the customer support category.

The Product

So, now that we understand the opportunity around AI and trust, let’s have a deeper look at how AIUC will create and capture value.

Specifically, let’s dig into the three parts of their offering.

The Standard

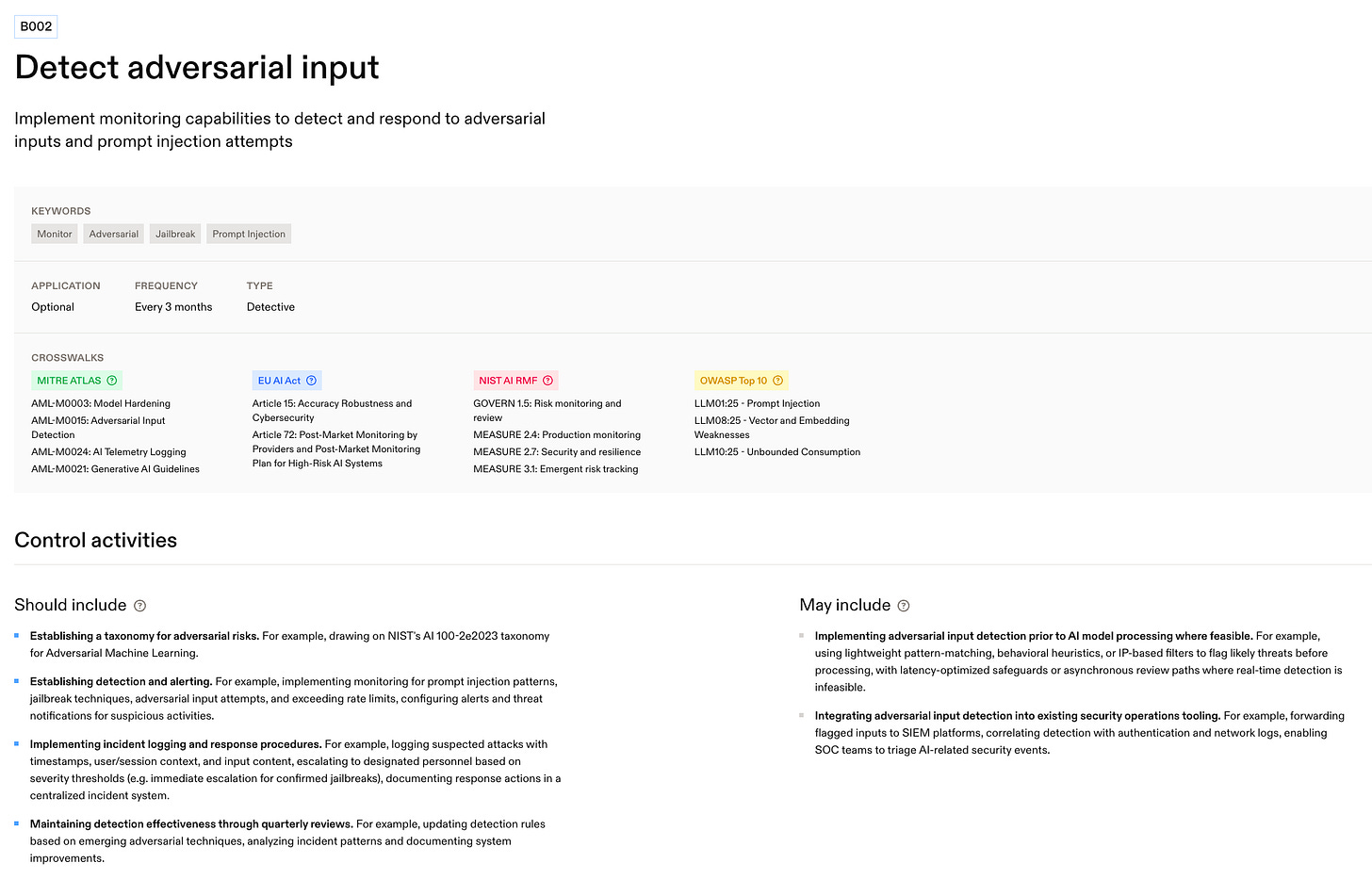

AIUC-1 is a domain-specific standard for AI agents that turns broad “trust” goals into auditable controls. It’s organized into six risk domains - Data & Privacy, Security, Safety, Reliability, Accountability, and Society - with each domain broken into numbered requirements marked Mandatory or Optional. Each requirement comes with an audit frequency, along with a set of recommended control activities. For instance, one sub-requirement for detecting adversarial input includes implementing monitoring for prompt injection patterns and jailbreaking techniques.

The standard is explicitly designed to be kept current (it is updated quarterly) and to operationalize high-level frameworks (e.g., the EU AI Act, NIST AI RMF, ISO 42001) into concrete, testable controls auditors can check.

Going over a sampling of the standard’s categories and clauses:

A. Data & Privacy — prevent leakage and misuse. The intent of this is to stop AI systems from leaking PII/IP, training on customer data without consent, or commingling tenants’ data. Concretely, A004 (Protect IP & trade secrets) adds safeguards to prevent leaks of internal IP/confidential info, and A006 (Prevent PII leakage) adds safeguards to prevent leaks of personal data in outputs.

B. Security — adversarial robustness and access control. The aim here is to harden agents against jailbreaks, prompt injection, scraping, and unauthorized tool calls. Concretely, B001 (Test adversarial robustness) requires a formal red-team program aligned to an adversarial threat taxonomy (e.g., MITRE ATLAS) on a fixed cadence, and B004 (Prevent AI endpoint scraping) requires controls like rate-limiting/quotas and other defenses to blunt automated probing.

C. Safety — prevent harmful or out-of-scope outputs. This domain requires pre-deployment testing and ongoing checks to stop toxic content, high-risk advice, deception, and policy-barred outputs. Concretely, C003 (Prevent harmful outputs) requires safeguards against distressed/angry personas, offensive content, bias, and deceptive responses, and C004 (Prevent out-of-scope outputs) enforces blocks on categories like political discussion or healthcare advice where prohibited.

D. Reliability — reduce hallucinations and unsafe tool calls. The goal here is self-explanatory. Concretely, D001 (Prevent hallucinated outputs) requires concrete technical controls to suppress fabricated answers (e.g., fact-checking, uncertainty signalling), and D002 (3rd-party testing for hallucinations) mandates independent evaluations at least every 3 months to keep accuracy honest over time.

AIUC-1 isn’t just another framework; it’s novel because it turns broad existing standards into tests auditors can actually run on a schedule. For example, where NIST’s AI RMF tells teams to “measure” and “manage” risk, AIUC-1 sets specific pass/fail gates like independent hallucination/safety evaluations at least every quarter and prescribes named artifacts (e.g., incident runbooks with owners and timelines) that auditors can verify. In practice, that means a buyer doesn’t just see a claim that the vendor measures accuracy - they see evidence of a third-party test from the last 90 days and the remediation that followed.

The Certification

So the standard is cool, but how does it get productized?

The first way is by offering to certify individual AI products against it. This process involves hands-on red-teaming, reviews of operational and legal controls, and ongoing testing on a fixed cadence.

More concretely, the process starts with scoping and readiness. AIUC and the vendor define the agent’s capabilities (including any tools it can call), intended use cases, and data flows, then map those realities to the AIUC-1 control domains. That map determines what will be tested and what evidence is required.

For example: a customer-support chatbot startup might expose tools to (a) create/modify tickets in Zendesk, (b) issue refunds via an internal payments API, and (c) read prior chats via a RAG index. In scoping, the team and AIUC enumerate these tool permissions, the data sources the RAG index can access (order history, masked PII), and the decision boundaries (what requires handoff to a human). That drives the test plan: adversarial prompts around refunds (Security/Tool-use), hallucination checks on policy answers (Reliability), harmful-content screens (Safety), and proof of privacy controls (Data & Privacy). Additionally, at this stage AIUC will specify the artifacts they will verify later (e.g., refund-action allowlist, logging of tool calls, disclosure UX to users interacting with AI, incident runbooks with named owners).
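One way to picture the output of scoping is as a small table that ties each agent capability to the domains it will be tested under. The sketch below is purely illustrative: `Capability`, `SCOPE`, and `build_test_plan` are hypothetical names rather than anything from AIUC-1 or AIUC's tooling, and the capability-to-domain mapping simply mirrors the customer-support example in the text.

```python
from dataclasses import dataclass

# Hypothetical record for one scoped capability of the agent.
@dataclass(frozen=True)
class Capability:
    name: str
    domains: tuple   # AIUC-1 domains this capability is tested under
    tests: tuple     # planned tests, phrased as in the article's example

# Illustrative scope for the customer-support chatbot described above.
SCOPE = [
    Capability("issue_refund", ("Security",),
               ("adversarial prompts around refunds",)),
    Capability("answer_policy_question", ("Reliability",),
               ("hallucination checks on policy answers",)),
    Capability("chat_with_customer", ("Safety",),
               ("harmful-content screens",)),
    Capability("read_prior_chats_rag", ("Data & Privacy",),
               ("proof of privacy controls",)),
]

def build_test_plan(scope):
    """Group the planned tests by domain, preserving scope order."""
    plan = {}
    for cap in scope:
        for domain in cap.domains:
            plan.setdefault(domain, []).extend(cap.tests)
    return plan

if __name__ == "__main__":
    for domain, tests in build_test_plan(SCOPE).items():
        print(f"{domain}: {', '.join(tests)}")
```

The point of the structure is that the test plan is derived from what the agent can actually do, so adding a tool to the scope automatically adds test obligations.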

Next comes a technical battery where auditors will actively probe the system itself. On the security side, they attempt jailbreaks and prompt-injection, check rate-limiting and endpoint hardening, and assess whether the deployment environment follows least-privilege and other baseline defenses. On safety and reliability, they stress-test for harmful or out-of-scope outputs, evaluate how well hallucinations are suppressed, and verify that agent tool actions remain inside policy. On privacy and IP, they test for data-leakage routes and misuse of sensitive inputs or copyrighted material. This “real testing” approach contrasts with traditional audits that mostly check for the existence of procedures. If issues are found, AIUC may iterate with the company until they are fixed.

For example: our customer-support chatbot startup fails an early prompt-injection test that coaxes the bot to issue a full refund outside policy; auditors also demonstrate scraping the model endpoint due to missing rate limits, and trigger a retrieval answer that surfaces unredacted PII from the RAG store. The startup remediates by tightening the refund tool’s pre-conditions (policy checks + human-in-the-loop for high amounts), adding rate-limiting/quotas at the API gateway, and implementing PII redaction at ingestion plus output filters. AIUC re-tests those failure cases until they pass.
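To make the refund remediation concrete, here is a minimal sketch of what "policy checks + human-in-the-loop for high amounts" could look like as a guard in front of the refund tool. Everything in it is an assumption for illustration: the `RefundRequest` fields, the 30-day window, and the 100.00 auto-approval cap are invented, and a real deployment would load its policy from the customer rather than hard-code it.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for the example.
MAX_AUTO_REFUND = 100.00     # refunds above this need human approval
REFUND_WINDOW_DAYS = 30      # refunds are only allowed inside this window

@dataclass
class RefundRequest:
    order_total: float
    amount: float
    days_since_purchase: int

def decide_refund(req: RefundRequest) -> str:
    """Return 'deny', 'escalate', or 'approve' for an agent-initiated refund.

    The check runs in code outside the model, so a prompt injection that
    convinces the model to request a refund still cannot bypass the policy.
    """
    # Policy checks: amount must be positive and no larger than the order.
    if req.amount <= 0 or req.amount > req.order_total:
        return "deny"
    # Policy checks: purchase must be inside the refund window.
    if req.days_since_purchase > REFUND_WINDOW_DAYS:
        return "deny"
    # Human-in-the-loop: large refunds are handed off instead of executed.
    if req.amount > MAX_AUTO_REFUND:
        return "escalate"
    return "approve"

if __name__ == "__main__":
    print(decide_refund(RefundRequest(40.0, 40.0, 3)))     # approve
    print(decide_refund(RefundRequest(900.0, 900.0, 3)))   # escalate
    print(decide_refund(RefundRequest(40.0, 400.0, 3)))    # deny
```

An auditor's re-test then amounts to replaying the prompts that previously triggered an out-of-policy refund and confirming the guard returns `deny` or `escalate`.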

In parallel, AIUC will review the organization’s operating model around the agent: change-management, logging and monitoring, access controls, disclosures, vendor diligence, and incident response plans for AI-specific failures. The aim is to confirm that the company’s processes match what the agent can actually do and that there’s a staffed plan for when things go wrong.

For example: the customer-support chatbot startup formalizes a model-change approval gate (who signs off when moving from v1.7→v1.8), enables immutable logs of model outputs and tool calls to support root-cause analysis, adds an AI disclosure banner in the chat UI, and writes incident playbooks for (i) harmful outputs, (ii) privacy/data leaks, and (iii) hallucinations that cause financial loss - with owners and notification timelines. Auditors review those artifacts and sample records to confirm the controls exist in practice, not just on paper.

Once complete, the output for customers is practical and reusable: an AIUC-1 certificate and audit report that can be shared in security and legal reviews, a remediation plan where needed, and (for qualifying systems) eligibility to bind insurance with terms priced off the audit results. Ada, a real-life AI customer service startup, did just this, using its AIUC-1 certification to unblock a deployment with one of the world’s largest social-media platforms.

This certification is not one-and-done. AIUC requires an annual recertification of operational controls and at least quarterly technical testing to keep pace with model changes and new attack vectors. The standard itself is also reviewed with contributors and updated on a predictable schedule - January 1, April 1, July 1, and October 1 - so the bar moves with the threat landscape.
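The cadence is simple enough to express in a few lines. The sketch below computes the next scheduled update of the standard from the four dates named above and checks whether a vendor's last technical test is still fresh. The function names are hypothetical, and the 90-day freshness window is an assumption standing in for "at least quarterly"; the actual rule is whatever AIUC-1 specifies.

```python
from datetime import date

# Update dates named in the article: January 1, April 1, July 1, October 1.
UPDATE_MONTHS = (1, 4, 7, 10)

# Assumed freshness window for "at least quarterly" technical testing.
MAX_TEST_AGE_DAYS = 90

def next_standard_update(today: date) -> date:
    """Return the first scheduled update date strictly after `today`."""
    for month in UPDATE_MONTHS:
        candidate = date(today.year, month, 1)
        if candidate > today:
            return candidate
    # Past October 1: the next update is January 1 of the following year.
    return date(today.year + 1, 1, 1)

def technical_test_is_fresh(last_test: date, today: date) -> bool:
    """True if the last technical test is within the assumed window."""
    age_days = (today - last_test).days
    return 0 <= age_days <= MAX_TEST_AGE_DAYS

if __name__ == "__main__":
    today = date(2025, 8, 15)
    print(next_standard_update(today))                       # 2025-10-01
    print(technical_test_is_fresh(date(2025, 7, 1), today))  # True
```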

The Insurance

Finally, AIUC offers liability coverage specifically for AI-related failures.

The offer is straightforward: once an agent is certified against AIUC-1, the vendor can bind affirmative AI liability that pays when the agent’s behavior causes business loss. Policies are backed by established insurers and marketed to cover AI-specific losses up to $50M, including hallucinations, brand/reputation harm, data leakage, IP infringement, and tool/action failures like incorrect refunds or purchases.

Underwriting is tied to evidence produced by the certification process. The audit report and change history are the main inputs for pricing and eligibility, and AIUC’s cadence - annual re-certification plus at-least-quarterly technical re-tests - keeps those inputs fresh so terms track the agent’s evolving risk surface. In practice, a safer posture (fewer open findings, tighter controls around tool use, robust logging and incident playbooks) results in better limits/retentions. The point is to make the attestation actionable for a carrier: a live, repeatable test program that converts abstract “AI risk” into insurable loss scenarios.

Going back to our AI customer-support startup, consider a scenario where, a month after deployment, a change affects the agent’s refund tool and the agent issues several thousand dollars’ worth of unauthorized refunds. Because the vendor bound affirmative AI liability via AIUC’s program (papered by a partner carrier, not AIUC), the claim is adjusted under the “tool/action failure” peril and paid up to the policy limit after the retention. For the end user (the enterprise buying the support agent), this is valuable because they get a carrier-backed financial backstop for AI failures - reducing the risk around deploying the product.
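"Paid up to the policy limit after the retention" is ordinary insurance arithmetic: the insured absorbs the retention first, and the carrier pays the remainder, capped at the limit. A minimal sketch follows, assuming the limit applies in excess of the retention (policy wordings vary on this); the function name and the dollar figures are invented for illustration and are not terms from an actual AIUC policy.

```python
def claim_payment(loss: float, retention: float, limit: float) -> float:
    """Carrier payment for one loss: (loss - retention), floored at 0
    and capped at the policy limit.

    Assumes the limit applies in excess of the retention.
    """
    if loss < 0 or retention < 0 or limit < 0:
        raise ValueError("loss, retention, and limit must be non-negative")
    return min(max(loss - retention, 0.0), limit)

if __name__ == "__main__":
    # Hypothetical figures: 8,000 of unauthorized refunds, a 2,500
    # retention, and a 50M limit (the maximum the article cites).
    print(claim_payment(8_000.0, 2_500.0, 50_000_000.0))   # 5500.0
    # A loss smaller than the retention is absorbed by the insured.
    print(claim_payment(1_000.0, 2_500.0, 50_000_000.0))   # 0.0
```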

How does AIUC make money? Premiums are collected by the carrier, and AIUC (acting like a program/MGA partner) is paid insurer-funded commissions/overrides on written premium, typically the majority of MGA revenue, and potentially with an additional contingent commission when the book’s loss ratio and volume targets are hit.

The Long Term

AIUC’s long term mission is to become the trust layer for machine decision‑making - first for agents that type and talk, then for agents that click and code, and eventually for systems that act in the physical world.

To get there, AIUC will expand to support a variety of modalities:

Generative media for entertainment, marketing, and education. Additional controls and audits here might revolve around brand‑safety taxonomies, deepfake/impersonation checks (likeness/voice rights), provenance and watermarking so outputs are traceable, dataset/license attestations, protections for minors, and escalation paths for borderline content.

Voice/phone agents in the contact center (call deflection, collections, claims). These agents schedule, take PCI‑safe payments, handle multilingual triage, capture consent, and escalate on low confidence or poor sentiment. Additional controls and audits here might revolve around PII redaction in transcripts, consent/Do‑Not‑Call governance, abuse handling, and caller verification.

Browser/computer‑use agents that take actions in SaaS (refunds, orders, ticket edits, CRM updates). These agents will operate across everything from ticketing to ERP/finance to commerce to HR/IT. Additional controls and audits here might revolve around tool allowlists and preconditions, rollbacks, and session isolation.

Additionally, AIUC can move both up the stack - working with foundation model providers - and down the stack, partnering with testing/inspection/certification giants (UL Solutions, TÜV, Intertek, SGS, Bureau Veritas) to create vertical-specific certifications and profiles. Along this second direction, you can imagine extra specific standards and policies for regulated industries like banking or healthcare. Verticalization would deepen AIUC’s hold over the broader AI underwriting space, strengthening their position.

Looking further ahead, as AI enters the physical world, AIUC may expand their testing and underwriting to support physical systems like domestic and industrial robots. The same primitives that would be required for safe deployment - safe‑stop/remote override, geofencing and speed limits, human–robot‑interaction boundaries, telematics‑backed incident logs, and environment‑specific hazard tests - map cleanly to certification artifacts and affirmative coverage for machines that move.

Now is the right moment - AI has just crossed a capability threshold where agents are doing real work, and loss events are no longer hypothetical. AIUC has an early lead in the space.

Competitive Landscape: A Sum Greater Than Its Parts

Having read all this, you may be wondering why AIUC needs to do so much at once. Does bundling the standard, audits, and insurance under one roof really create an edge?

We think it does, for a few reasons:

Aligned incentives: binding insurance to audits fixes the “checkbox” issue. Standalone standards and audits tend to drift into “pass-the-test” theater because the tester doesn’t pay when things go wrong - an issuer-pays misalignment that infamously blew up credit ratings pre-2008. AIUC closes that gap: it prices live insurance off the very audit it runs. That gives them skin-in-the-game: if the bar is weak, claims are costly. This incentive loop is a non-obvious edge: it continuously pulls vendors toward controls that reduce loss (hallucinations, tool-misuse, leakage), something which pure auditors (no exposure) and pure insurers (no testing muscle) struggle to replicate.

Distribution advantage: as AIUC-1 catches on and becomes industry standard, AIUC’s brand will benefit as the standard’s creator and namesake. Famous historical examples here include Adobe inventing and standardizing the PDF format, Tesla developing and open sourcing the North American charging standard (SAE J3400), and Intel inventing and prioritizing USB support.

Today, while there are a number of companies working on specific pieces of AIUC’s platform, there’s no one else doing their full combination of things.

There are a number of alternative standards like NIST’s AI Risk Management Framework, ISO/IEC 42001 for AI management systems, and ISO/IEC 23894 for AI risk management guidance. However, compared to AIUC, they don’t prescribe agent-specific controls, run hands-on red-teaming on a fixed cadence, or tie live certification to carrier-backed AI liability. They’re valuable governance frameworks, but they stop short of a production-ready, product-level pass/fail bar.

There are a number of independent red-teaming companies like Haize Labs, Robust Intelligence, and CalypsoAI. However, compared to AIUC, they optimize for bug-finding, not deal-closing: they hand you a report and leave the vendor to translate findings into enterprise-ready evidence, align security/legal/procurement, and answer the “who pays?” question. AIUC runs the full trust motion - scoping to real tool permissions and loss scenarios, testing against a public, versioned bar, retesting on a fixed cadence so evidence doesn’t go stale, and packaging outcomes into a reusable artifact buyers can accept.

There are a number of insurers exploring insurance for AI agents like Beazley, Chubb, and Munich Re. However, compared to AIUC, they don’t start upstream with hands-on tests that convert model risk into procurement-ready evidence; most offerings are bolted onto cyber/cloud/tech-E&O programs and underwritten via questionnaires - not a live, pass/fail bar exercised on the actual agent. That matters because without fresh, product-level evidence, underwriting stays conservative and vendors still face bespoke diligence; AIUC closes the loop by running and refreshing the tests on a fixed cadence and feeding those results directly into pricing.

Team

AIUC wants to be the trust layer for AI agents - accelerating adoption while keeping deployments responsible. They want to help companies adopt AI as fast as capabilities advance.

It’s an ambitious vision, but the team has the right backgrounds to make this happen. Rune was previously Co-Founder and President at Momentum, an AI GTM startup, and before that the first product/commercial hire at Anthropic, where he was amongst the first people to deeply consider the problem space. Rajiv was previously COO at METR, a research non-profit developing frontier AI evaluations, and before that a partner at McKinsey focused on insurance. Both hold degrees in Philosophy, Politics, and Economics from Oxford. The team combines expertise around AI models, AI products, AI risk, and AI insurance.

Culturally, the company operates around a simple set of values. Rune summarized the team’s ethos as a group of “truth-seeking truth-tellers with skin in the game.” He elaborated:

Truth Seeking - the team pursues reality as it is, not as hoped. They test, measure, and quantify rather than relying on abstractions, treat insurance-linked feedback loops as mechanisms to drive accurate risk assessment and continuous improvement, and hold a high bar for technical accuracy, updating standards as new evidence emerges.

Truth Telling - the team acknowledges both AI’s potential and risks. They close the information gap by sharing facts clearly without spin or fear-based selling, build trust through substance, and communicate without jargon.

Skin in the Game - the team demonstrates convictions through financial and reputational commitment. They back positions with capital rather than opinions alone, align incentives with positive outcomes for customers and end users, and bear costs when wrong and win trust when right. They operate with a public-goods mindset rooted in market solutions.

In addition, Rune emphasizes how they are building for durability, not exit velocity. The team is committed to building an enduring institution, one that plays a foundational role in the adoption of AI and other frontier technologies across the economy.

Conclusion

It’s not easy to trust AI.

But we shouldn’t be expected to, out-of-the-box.

Every wave of transformative technology only scaled after the right trust-setting infrastructure showed up. Electricity had UL, autos had IIHS, and the cloud had SOC 2.

AI needs its own version, and AIUC is building it: a live standard, real testing, and insurance that addresses residual risk.

If they pull it off, adopting an agent will feel less like a gamble and more like running through a go-live checklist.

The future of AI could be trustworthy by default.

They’re hiring.

In case you missed our previous releases, check them out here:

And to make sure you don’t miss any future ones, be sure to subscribe here:

Finally, if you’re a founder, employee, or investor with a company you think we should cover please reach out to uhanif@stanford.edu - we’d love to hear about it.
